Run library(“xgboost”) in the new notebook. To create a new notebook for the R language, in the Jupyter Notebook menu, select New, then select R. Select Open with Jupyter Notebook option. After installation, click again on the arrow right to R. Follow the instructions to complete the installation. From the dropdown menu, select Open Terminal. Next to Packages, select Python version (At this time, Python 3.8) and R. To install the R language and r-essentials packages, select Environments to create a new environment. To install and run R in a Jupyter Notebook: You can also use XGBoost with the R programming language in Jupyter Notebook. Copy conda install -c conda-forge xgboost. Now you’re all set to use the XGBoost package in Python If there is no error, you have successfully installed the XGBoost package for Python. Form the Jupyter homepage, open a Python 3 notebook and run

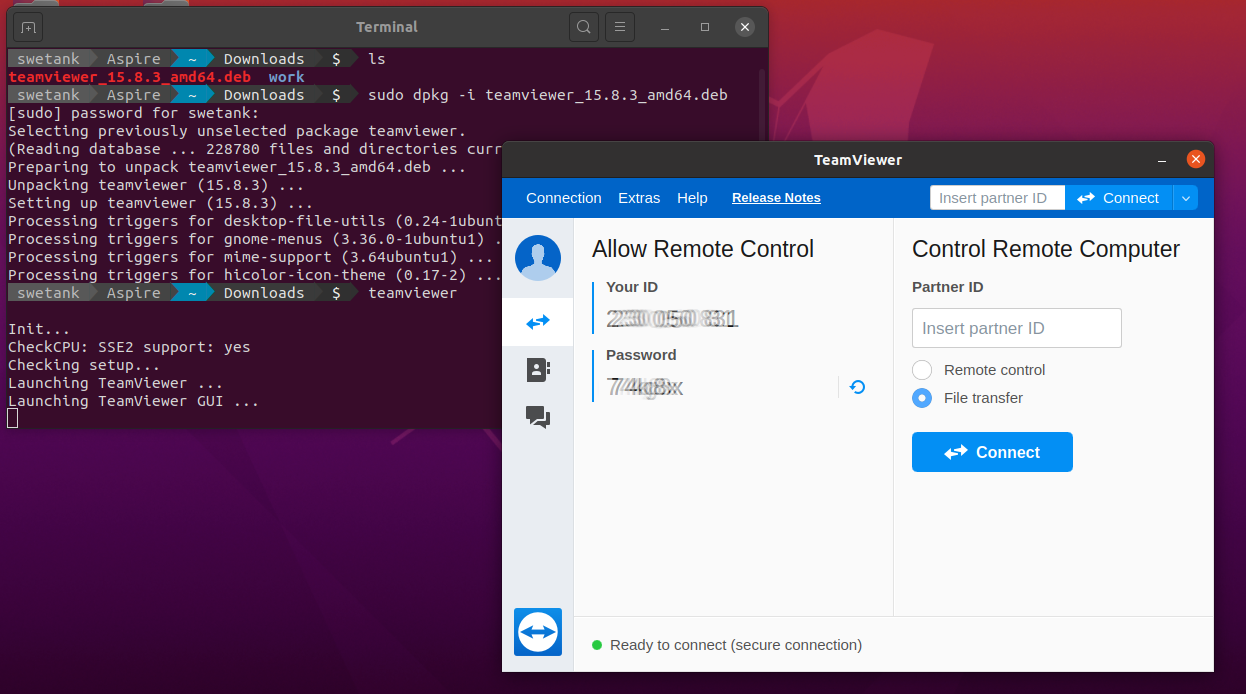

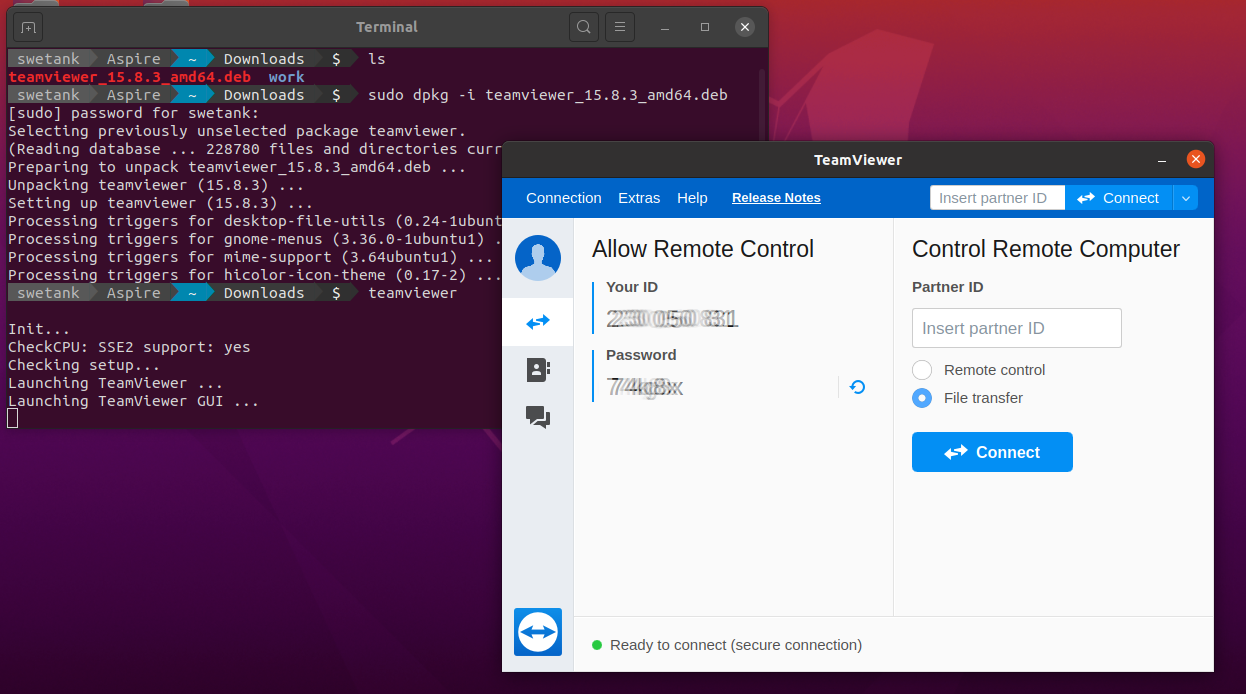

Now launch the Jupyter Notebook through Anaconda Navigator. Install XGBoost through Anaconda Terminal (Image by author)

After downloading the setup file, double click on it and follow the on-screen instructions to install Anaconda on your local machine. All you need to do is run the relevant command through the Anaconda terminal. It also provides the facility to install new libraries. It includes all the things: hundreds of packages, IDEs, package manager, navigator and much more. It is the most preferred distribution of Python and R for data science. For Python and R users, the simplest way to get Python, R and other data science libraries is to install them through Anaconda. It is necessary to set up your local machine before doing any machine learning task. Polynomial Regression with a Machine Learning Pipeline. k-fold cross-validation explained in plain English. Random forests - An ensemble of decision trees. Train a regression model using a decision tree. You can also refresh your memory by reading the following contents previously written by me. It is recommended to have a good knowledge and understanding of machine learning techniques such as cross-validation, machine learning pipelines, etc and algorithms such as decision trees, random forests, etc. I assume that you are already familiar with popular Python libraries such as numpy, pandas, scikit-learn, etc and using Jupyter Notebook and RStudio. Milestone 7: Building a pipeline with XGBoost. Milestone 6: Get your data ready for XGBoost.

Milestone 5: XGBoost’s hyperparameters tuning.Milestone 4: Evaluating your XGBoost model through cross-validation.

Milestone 2: Classification with XGBoost. I will occasionally use R where important. When implementing the algorithm, the default programming language is Python. When you complete the journey through all milestones, you will have a good knowledge and hands-on experience (implementing the algorithm effectively using R and Python) in the followings. Here, each subtopic is called a milestone. We discuss the entire topic step by step. It is like a journey, maybe a long journey for newcomers.

Milestone 2: Classification with XGBoost. I will occasionally use R where important. When implementing the algorithm, the default programming language is Python. When you complete the journey through all milestones, you will have a good knowledge and hands-on experience (implementing the algorithm effectively using R and Python) in the followings. Here, each subtopic is called a milestone. We discuss the entire topic step by step. It is like a journey, maybe a long journey for newcomers. #XGBOOST INSTALL LINUX SERIES#

The topic we are discussing is broad and important so that we discuss it through a series of articles. Most people say XGBoost is a money-making algorithm because it easily outperforms any other algorithms, gives the best possible scores and helps its users to claim luxury cash prizes from data science competitions. Welcome to another article series! This time, we are discussing XGBoost (Extreme Gradient Boosting) - The leading and the most preferred machine learning algorithm among data scientists in the 21st century.
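The Python installation check described in this article can also be scripted. What follows is a minimal sketch rather than the author's own code: the helper name `check_package` is hypothetical and introduced only for illustration. It relies on the standard-library `importlib` module to report whether `xgboost` can be imported in the current environment, so it runs without error even when the package is missing.

```python
import importlib
import importlib.util


def check_package(name):
    """Return the version string of an importable package, or None otherwise."""
    # find_spec returns None when no top-level package with this name is found
    if importlib.util.find_spec(name) is None:
        return None
    try:
        module = importlib.import_module(name)
    except Exception:
        # The package is present but fails to load (for example a broken install)
        return None
    # Not every module defines __version__, so fall back to a placeholder
    return getattr(module, "__version__", "unknown")


# check_package is a hypothetical helper, not part of xgboost or Anaconda
version = check_package("xgboost")
if version is None:
    print("xgboost could not be imported in this environment")
else:
    print(f"xgboost {version} is ready to use")
```

Running this cell in a Python 3 notebook gives the same signal as a plain import, but with a readable message instead of a traceback when the installation did not succeed.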
